Watch out! You're reading a series of articles

- Logdy Pro Part I: The Problem

The article clarifies the problems Logdy Pro solves and how it solves them

- (currently reading) Logdy Pro Part II: Storage

The article uncovers how Logdy Pro achieves a 15-20x compression ratio while still maintaining fast search and query capabilities

- Logdy Pro Part III: Benchmark

The article presents comprehensive benchmark results demonstrating Logdy Pro's performance metrics and storage efficiency compared to industry alternatives.

Logdy Pro Part II: Advanced Log Compression and Storage Architecture

Revisiting the Log Management and Compression Challenge

In Part I, we explored the fundamental challenges of log management and introduced Logdy Pro as a solution that balances performance, cost-efficiency, and operational simplicity. In this article, we'll dive deep into Logdy Pro's innovative log compression and storage architecture, revealing the specialized techniques that enable its remarkable 15-20x compression ratio while maintaining high-speed query performance for compressed logs.

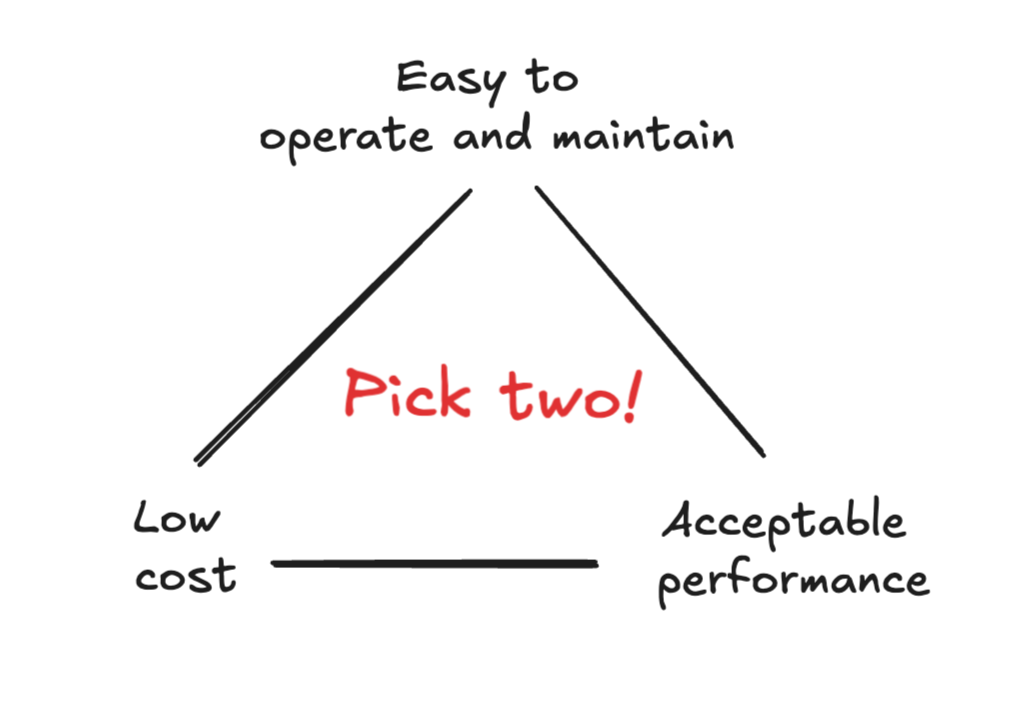

Let's first revisit the "pick two" problem from the previous article:

The Database Trade-off Triangle

The Problem with General-Purpose Log Storage and Compression Solutions

In today's technology landscape, most databases and log storage engines attempt to cover as many use cases as possible, often sacrificing optimal log compression efficiency. We have systems that simultaneously:

- Support both SQL and NoSQL workloads

- Provide vector search capabilities

- Maintain ACID compliance

- Handle time-series data

- Offer full-text search

- Support geospatial queries

This "one-size-fits-all" approach inevitably leads to compromises. While these systems excel at versatility, they often sacrifice efficiency in specific domains.

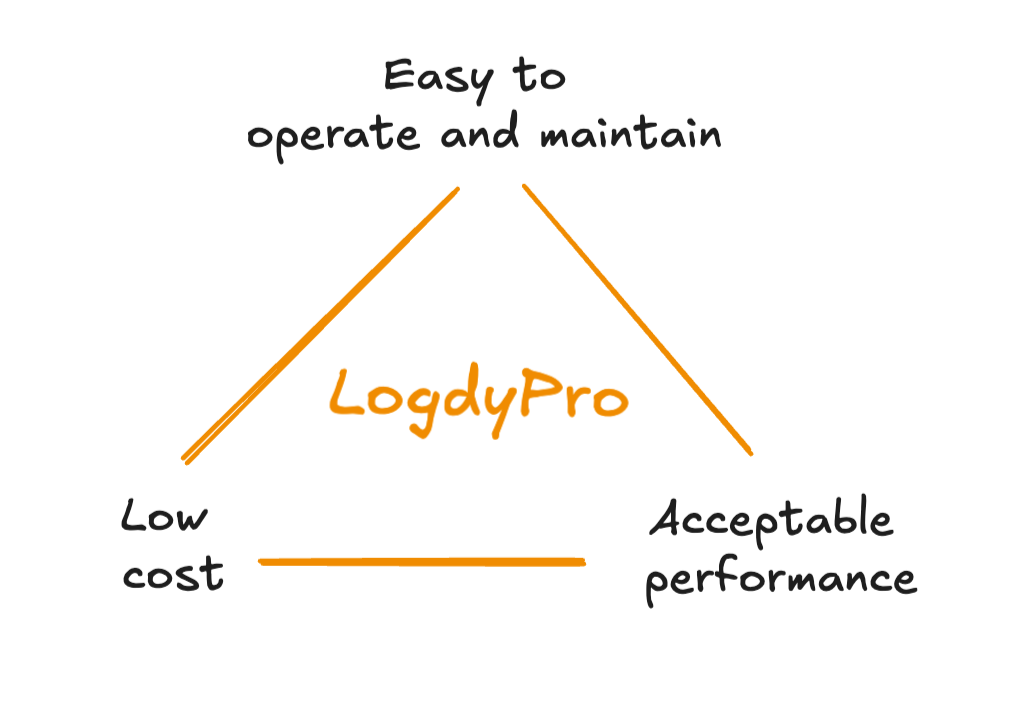

Logdy Pro's Balanced Approach

The Cost Misconception of Inefficient Log Compression

We often hear that "storage is cheap," "memory is cheap," and "CPU is cheap." While hardware costs have indeed decreased over time, the total cost of ownership for log data systems remains significant, especially at scale. Effective log compression becomes increasingly important as data volumes grow.

In today's business landscape, many companies prioritize rapid growth over immediate profitability, often disregarding operational costs in the short term. This economic mindset has influenced software development, where applications built for speed, performance, and broad use-case coverage are frequently valued more highly than those optimized for operational simplicity and cost efficiency.

However, as organizations mature and scale, the inefficiencies of general-purpose solutions become increasingly apparent in their bottom line. This is particularly true for log management, where data volumes grow relentlessly with system usage.

Specialized Storage and Compression for Log Data

The Genesis of Logdy Pro's Log Compression Engine

When developing the open-source version of Logdy, it became clear that simply polling underlying log files wouldn't scale beyond development workflows. For production environments with terabytes of log data, a more sophisticated log compression approach was needed.

In August 2024, I began researching specialized log compression and storage solutions. The goal was to find or build something that could better balance the trade-off triangle for this specific domain. I conducted extensive benchmarks with various log compression technologies, including SQLite with compression plugins, but none provided the combination of compression ratio, query performance, and operational simplicity I was seeking.

This led to the development of a custom storage engine specifically designed for log data. Rather than trying to be a general-purpose database, Logdy Pro's storage engine is purpose-built for the unique characteristics of logs.

The Unique Characteristics of Log Data for Optimal Compression

Log data has several distinctive properties that make it amenable to specialized log compression and storage optimizations:

Immutability — Once a log entry is produced, it is never modified or deleted (except through retention policies)

Time-indexed nature — Every log entry has an associated timestamp that is critical to its meaning and utility

Temporal ordering — Log entries are typically processed in chronological order (though they may occasionally arrive out of sequence)

High repetition — Log entries describe events within a finite state machine (your application). Since applications have a limited set of execution paths, log patterns are highly repetitive, making them ideal candidates for advanced compression techniques

Structured format — Modern logs are typically formatted as JSON or can be parsed into structured formats, enabling field-level operations

Log Data Access Patterns

Equally important to the data characteristics are the typical access patterns for log data:

Time-bounded queries — Every log query includes a time range constraint (e.g., "show me errors from the last hour")

Mixed query types — Both full-text searches ("find all logs containing 'connection timeout'") and field-specific queries ("show logs where status_code=500") are common

Limited aggregation needs — Unlike analytics databases, log storage typically requires only basic aggregations (count, distinct values, etc.)

Self-contained entries — Log entries contain all relevant context, eliminating the need for complex joins

Read-heavy during investigations — Log data has a highly skewed access pattern: it's written continuously but read intensively only during investigations, so reads are rare overall (a read/write ratio of approximately 1:99)

Bulk reads — When logs are queried, users typically scan large portions of data to understand context and sequence of events

The Logdy Pro Log Compression and Storage Architecture

By focusing exclusively on log data and its access patterns, Logdy Pro's log compression system can eliminate many features typically found in general-purpose databases while optimizing for maximum compression efficiency:

- No need for complex transaction support

- No update or delete operations (except through retention policies)

- No write-ahead logging (WAL)

- No complex query optimizer for joins

- No multi-table schema management

Instead, Logdy Pro implements a highly specialized append-only storage system with powerful querying capabilities specifically designed for log data.

Advanced Log Compression Techniques

Let's examine how Logdy Pro achieves its remarkable 15-20x log compression ratio by looking at a typical log entry and applying specialized log compression methods:

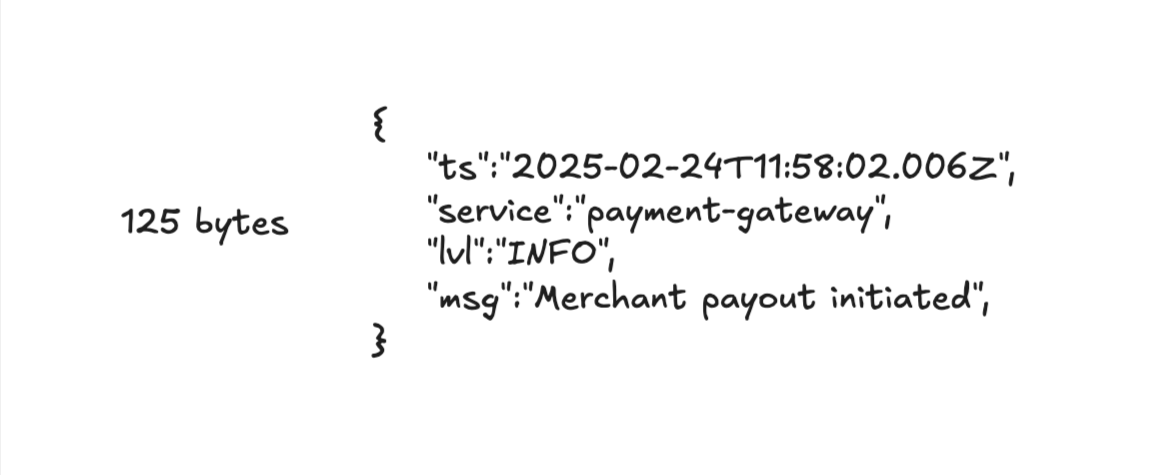

Standard JSON Log Format

In this standard JSON format, we can identify several inefficiencies:

- Structural overhead — JSON syntax (braces, quotes, commas) adds significant overhead

- Repeated property names — Field names like "ts", "service", "lvl" are repeated in every log entry

- Inefficient timestamp encoding — The timestamp uses 24 bytes as text when it could be represented much more efficiently

- Repeated field values — Values like "payment-gateway", "INFO", etc. appear frequently across log entries

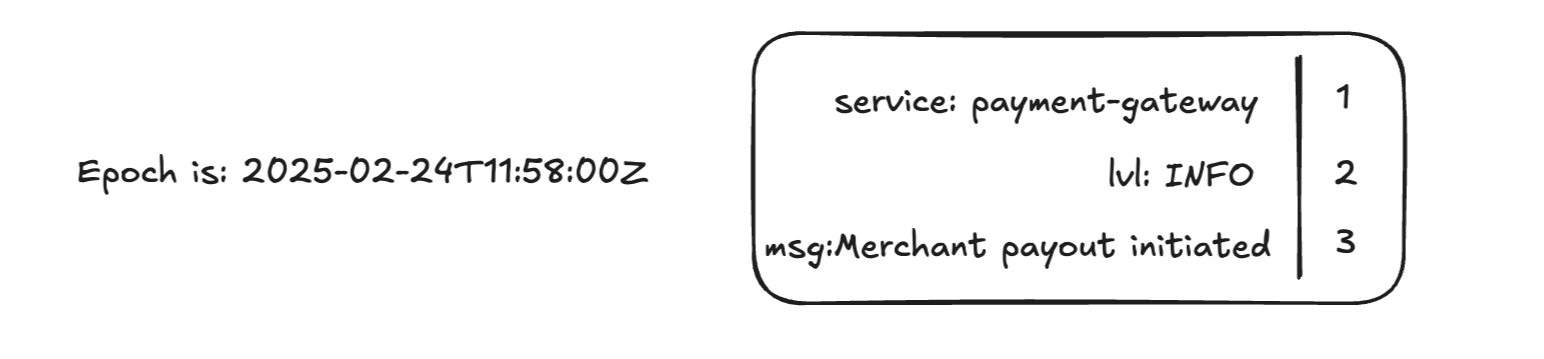

Step 1: Eliminate JSON Overhead for Better Log Compression

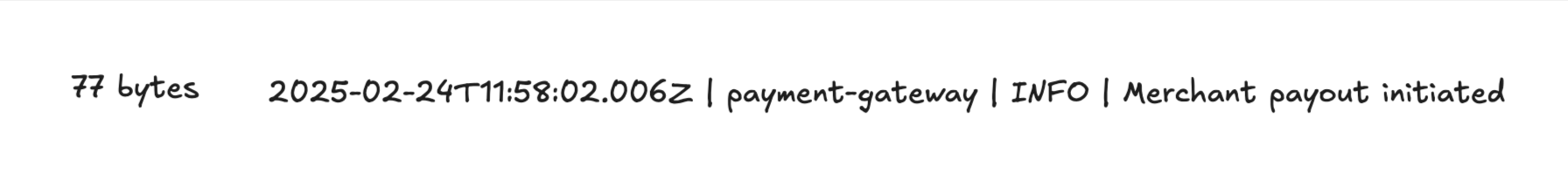

The first log compression optimization removes the JSON structure while preserving all information by storing values in a predefined order:

Removing JSON Overhead

This approach already reduces size significantly, but we can go much further.
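The Python sketch below illustrates this step. It drops the JSON syntax and field names by writing values in a fixed schema order. Several details are assumptions made for the example, because the article does not specify Logdy Pro's actual record layout:

- the sample entry itself;
- the `msg` field name;
- the use of the ASCII unit separator (`\x1f`) as a delimiter.

```python
import json

# Hypothetical schema: field order is fixed up front, so names never need storing.
FIELD_ORDER = ["ts", "service", "lvl", "msg"]
SEPARATOR = "\x1f"  # ASCII unit separator; assumed never to appear inside values


def strip_json_overhead(line: str) -> str:
    """Drop braces, quotes and field names, keeping only values in schema order."""
    entry = json.loads(line)
    return SEPARATOR.join(str(entry[field]) for field in FIELD_ORDER)


def restore_json(record: str) -> str:
    """Rebuild the compact JSON entry from the positional record."""
    values = record.split(SEPARATOR)
    return json.dumps(dict(zip(FIELD_ORDER, values)), separators=(",", ":"))


# Illustrative entry (the article's original example is shown only as an image).
raw = ('{"ts":"2024-08-15T10:30:02.006Z","service":"payment-gateway",'
       '"lvl":"INFO","msg":"Merchant payout initiated"}')
compact = strip_json_overhead(raw)
print(len(raw.encode()), "->", len(compact.encode()), "bytes")
```

The field order is known in advance, so `restore_json` can rebuild the entry exactly. Dropping the names therefore loses no information.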

Step 2: Dictionary Encoding and Numeric Representation for Enhanced Compression

Next, Logdy Pro's log compression engine applies dictionary encoding to convert repeated strings to numeric identifiers and represents timestamps as milliseconds since a custom epoch:

Dictionary Encoding and Numeric Representation

The dictionary maps each unique string to a numeric ID, dramatically reducing storage requirements for repeated values. The timestamp is converted from a 24-byte string to a compact numeric representation.
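Here is a minimal sketch of dictionary encoding combined with the custom-epoch timestamp. It rests on two assumptions:

- A single dictionary is shared across fields. This matches the consecutive IDs 1, 2, 3 in the example.
- `CUSTOM_EPOCH` is hypothetical, picked so that the sample timestamp maps to 2006.

The class and function names are illustrative, not Logdy Pro's API.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical custom epoch, chosen so the sample timestamp below encodes to 2006.
CUSTOM_EPOCH = datetime(2024, 8, 15, 10, 30, tzinfo=timezone.utc)


class Dictionary:
    """Assigns each distinct string a small integer ID (starting at 1) and maps IDs back."""

    def __init__(self) -> None:
        self.ids: dict[str, int] = {}
        self.values: list[str] = []

    def encode(self, value: str) -> int:
        # First occurrence gets the next free ID; repeats reuse the existing one.
        if value not in self.ids:
            self.values.append(value)
            self.ids[value] = len(self.values)
        return self.ids[value]

    def decode(self, value_id: int) -> str:
        return self.values[value_id - 1]


def encode_timestamp(iso_ts: str) -> int:
    """Turn a 24-byte ISO 8601 UTC string into integer milliseconds since CUSTOM_EPOCH."""
    ts = datetime.fromisoformat(iso_ts.replace("Z", "+00:00"))
    return (ts - CUSTOM_EPOCH) // timedelta(milliseconds=1)


dictionary = Dictionary()
row = [
    encode_timestamp("2024-08-15T10:30:02.006Z"),
    dictionary.encode("payment-gateway"),
    dictionary.encode("INFO"),
    dictionary.encode("Merchant payout initiated"),
]
print(row)  # [2006, 1, 2, 3]
```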

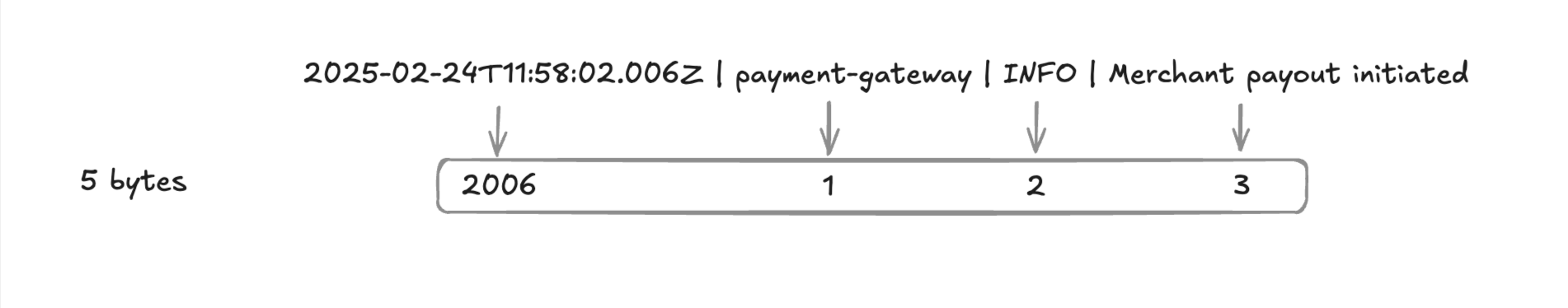

Step 3: Numeric Encoding of Log Entries for Maximum Compression

After applying these log compression transformations, our log entry is reduced to a simple sequence of numbers:

Numeric Encoding of Log Entry

The original 125-byte JSON entry is now represented by just 5 bytes (one 16-bit unsigned integer and three 8-bit unsigned integers):

- 2006: Milliseconds since the custom epoch (timestamp)

- 1: Dictionary ID for "payment-gateway" (service)

- 2: Dictionary ID for "INFO" (level)

- 3: Dictionary ID for "Merchant payout initiated" (message)

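For this entry, that is a 25x reduction in size (125 bytes down to 5, not counting the dictionary itself, whose cost is amortized across every entry that reuses its strings). Query performance actually improves as well, since numeric comparisons are faster than string operations.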
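Python's `struct` module can sketch how the four numbers pack into exactly 5 bytes: one little-endian `uint16` followed by three `uint8` fields. The byte order and the function names are assumptions for illustration.

```python
import struct

# '<' = little-endian with no padding; H = uint16 (timestamp), B = uint8 (dictionary IDs).
ENTRY = struct.Struct("<HBBB")


def pack_entry(ts_ms: int, service_id: int, level_id: int, message_id: int) -> bytes:
    """Serialize one encoded log entry into a fixed 5-byte record."""
    return ENTRY.pack(ts_ms, service_id, level_id, message_id)


def unpack_entry(record: bytes) -> tuple:
    """Recover the four integers from a 5-byte record."""
    return ENTRY.unpack(record)


record = pack_entry(2006, 1, 2, 3)
print(ENTRY.size, record.hex())  # 5 d607010203
print(unpack_entry(record))      # (2006, 1, 2, 3)
```

Fixed widths like these have hard limits:

- timestamps can reach at most 65,535 ms;
- the dictionary can hold at most 255 IDs.

The sketch works only because the example values are small. A real store needs wider or variable-width fields, and the variable-width integer encoding described in Step 5 is one way to handle this.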

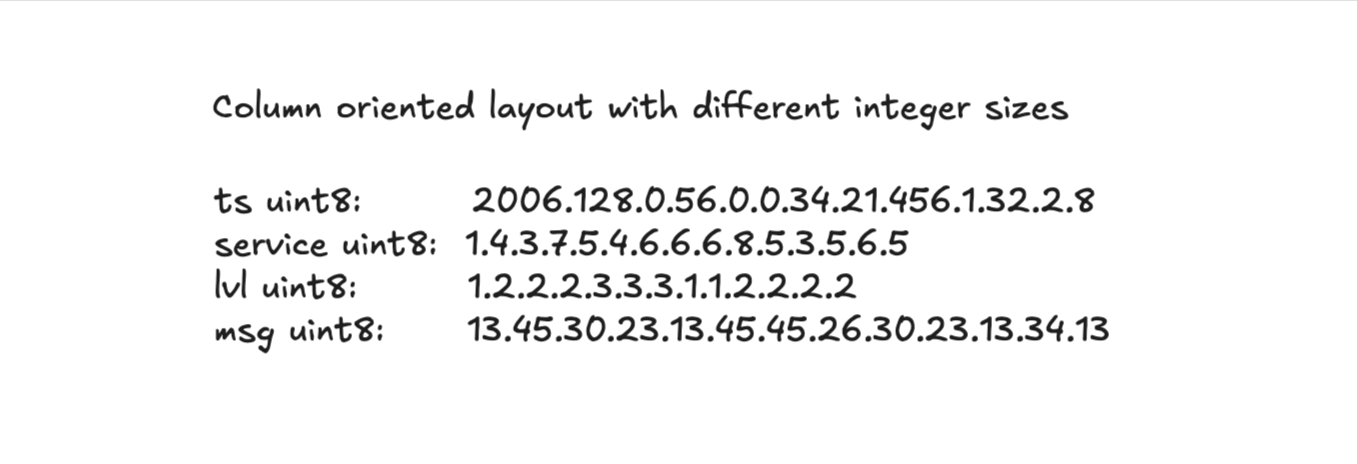

Step 4: Columnar Storage Organization for Optimized Log Compression

Rather than storing these numeric sequences row by row, Logdy Pro's log compression system organizes them in a columnar format for better compression efficiency:

Columnar Storage Organization

This columnar approach provides several advantages:

- Better compression, as similar values are stored together

- More efficient queries that only need to access relevant columns

- Improved cache locality for column-specific operations
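The row-to-column transposition takes only a few lines of Python. The extra rows are hypothetical additions to the article's single example entry, as are IDs 4 and 5, which stand for an `ERROR` level and a second message.

```python
# Numeric rows as in Step 3: (ts_ms, service_id, level_id, message_id).
# Rows after the first are hypothetical; 4 stands for "ERROR", 5 for another message.
rows = [
    (2006, 1, 2, 3),
    (2016, 1, 2, 3),
    (2026, 1, 4, 5),
    (2036, 1, 2, 3),
]
FIELDS = ("ts", "service", "lvl", "msg")


def to_columns(rows):
    """Transpose row-oriented entries into one contiguous list per field."""
    return {name: [row[i] for row in rows] for i, name in enumerate(FIELDS)}


def row_at(columns, position):
    """Reassemble a single entry from its position in every column."""
    return tuple(columns[name][position] for name in FIELDS)


columns = to_columns(rows)
print(columns["lvl"])  # [2, 2, 4, 2]: a level filter reads only this column
errors = [p for p, lvl in enumerate(columns["lvl"]) if lvl == 4]
print(errors, row_at(columns, errors[0]))  # [2] (2026, 1, 4, 5)
```

The `service` column collapses into a run of identical values. Runs like this are what the later encoding and compression layers exploit.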

Step 5: Advanced Log Compression Encoding Techniques

Logdy Pro applies additional specialized log compression encoding techniques to further reduce storage requirements:

Double-Delta Encoding for Timestamps

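- Instead of storing absolute time values, store the difference between consecutive timestamps (the delta), then the difference between consecutive deltas (the delta-of-delta)

- For example, if logs are exactly 10ms apart, every delta is "10" and every delta-of-delta is "0"; only the first timestamp and the first delta are stored in full

- This is particularly effective because log timestamps often have regular patterns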
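Below is a minimal delta-of-delta encoder and decoder in Python, assuming timestamps are integer milliseconds as in Step 2. The first timestamp and the first delta are kept as-is. Every later value is the change in the gap between neighbours, so regularly spaced logs turn into runs of zeros. The function names are illustrative.

```python
def delta_of_delta_encode(timestamps: list) -> list:
    """Keep the first timestamp and first delta, then store changes between deltas."""
    if len(timestamps) < 2:
        return list(timestamps)
    out = [timestamps[0], timestamps[1] - timestamps[0]]
    prev_delta = out[1]
    for prev, cur in zip(timestamps[1:], timestamps[2:]):
        delta = cur - prev
        out.append(delta - prev_delta)  # 0 whenever the spacing is unchanged
        prev_delta = delta
    return out


def delta_of_delta_decode(encoded: list) -> list:
    """Invert delta_of_delta_encode by re-accumulating deltas, then timestamps."""
    if len(encoded) < 2:
        return list(encoded)
    timestamps = [encoded[0], encoded[0] + encoded[1]]
    delta = encoded[1]
    for dod in encoded[2:]:
        delta += dod
        timestamps.append(timestamps[-1] + delta)
    return timestamps


timestamps = [2006, 2016, 2026, 2036, 2047]
print(delta_of_delta_encode(timestamps))  # [2006, 10, 0, 0, 1]
```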

Variable-Width Integer Encoding

- Use the minimum number of bits needed to represent each integer

- Small values (common in delta encoding) can be stored in just a few bits

- Implement bit-packing to store multiple small integers in a single machine word
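One common way to implement variable-width integers is unsigned LEB128, the varint format used by Protocol Buffers. Pairing it with ZigZag mapping lets small negative deltas also encode to a single byte. The article does not say which variable-width scheme Logdy Pro uses, so treat this as a sketch of the general technique rather than its on-disk format. Bit-packing several values into one machine word is a separate refinement that is not shown here.

```python
def zigzag(n: int) -> int:
    """Map signed ints to unsigned so small magnitudes stay small: 0,-1,1,-2 -> 0,1,2,3."""
    return n << 1 if n >= 0 else ((-n) << 1) - 1


def unzigzag(z: int) -> int:
    """Invert zigzag: even values are non-negative, odd values are negative."""
    return z >> 1 if z % 2 == 0 else -((z + 1) >> 1)


def encode_varint(value: int) -> bytes:
    """Unsigned LEB128: 7 payload bits per byte; the high bit means 'more bytes follow'."""
    if value < 0:
        raise ValueError("encode_varint expects a non-negative integer")
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)
        else:
            out.append(byte)
            return bytes(out)


def decode_varints(data: bytes) -> list:
    """Decode a concatenation of LEB128 varints back into integers."""
    values, current, shift = [], 0, 0
    for byte in data:
        current |= (byte & 0x7F) << shift
        if byte & 0x80:
            shift += 7
        else:
            values.append(current)
            current, shift = 0, 0
    return values


# A signed, mostly tiny sequence, like the output of delta-of-delta timestamp encoding.
encoded = [2006, 10, 0, 0, 1]
payload = b"".join(encode_varint(zigzag(v)) for v in encoded)
print(len(payload))  # 6 bytes, versus 40 as five 64-bit integers
```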

Block-level Log Compression

- Apply general-purpose compression algorithms (like Zstandard) to blocks of already-optimized data

- This captures remaining patterns that dictionary and delta encoding might miss

- Creates a multi-layered log compression approach for maximum efficiency
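The block-level layer can be sketched with Python's standard-library `zlib` standing in for Zstandard, so the example needs no third-party dependency. The block contents are hypothetical. The point is that already-encoded columns still contain repetition that a general-purpose compressor can remove.

```python
import zlib

# zlib is a stand-in here; the article names Zstandard, which would slot in the same way.
def compress_block(encoded: bytes, level: int = 9) -> bytes:
    """Compress one block of already-encoded column bytes."""
    return zlib.compress(encoded, level)


def decompress_block(block: bytes) -> bytes:
    """Restore the encoded column bytes exactly."""
    return zlib.decompress(block)


# Hypothetical "lvl" column block: mostly INFO (ID 2) with a recurring ERROR (ID 4).
lvl_block = bytes([2, 2, 2, 2, 4] * 200)
compressed = compress_block(lvl_block)
print(len(lvl_block), "->", len(compressed))
```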

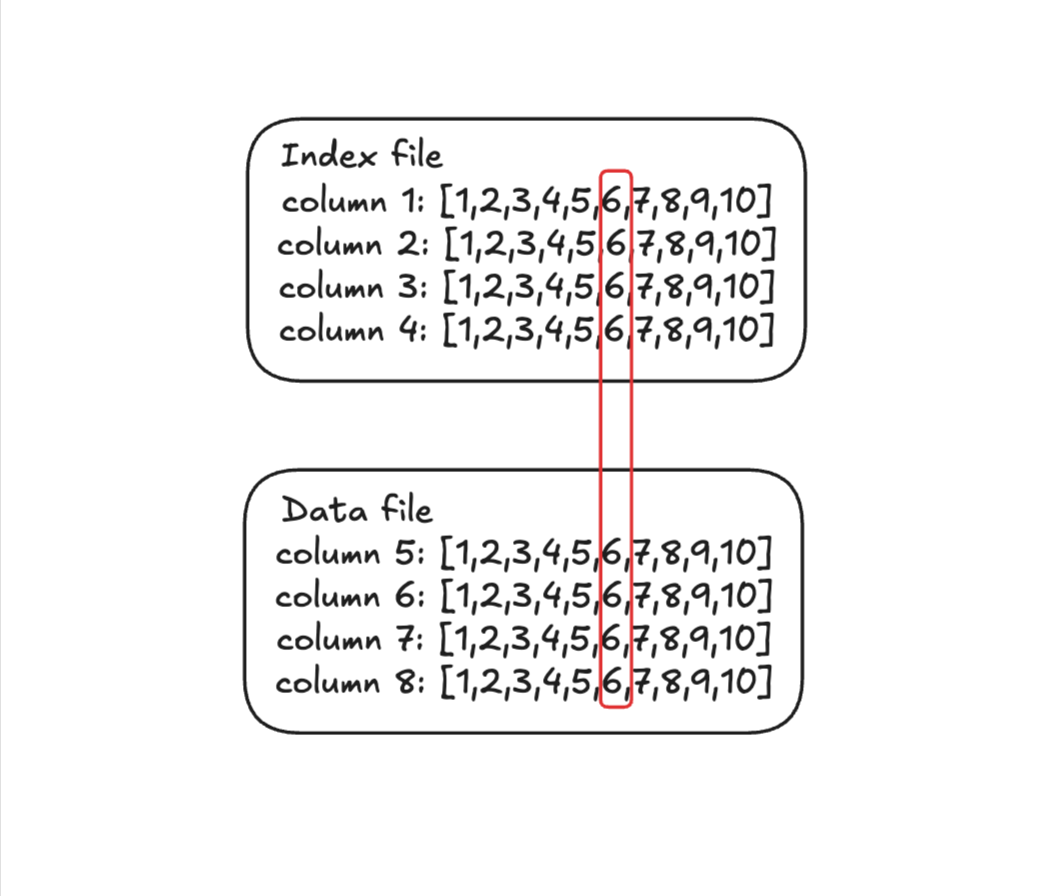

The Two-File Log Compression Storage System

In practice, Logdy Pro organizes data into two primary files that work together to provide both optimal log compression and query efficiency:

Index File

- Contains metadata and pointers to the actual log data

- Stores time-based indices and field mappings

- Enables fast lookups and efficient querying

- Organized as lists of integers where positions map directly to the Data file

- Typically 30-40% of the total storage size

Data File

- Stores the actual log content in highly compressed columnar format

- Compresses each column with a general-purpose algorithm (like Zstandard) on top of the specialized encodings

- Achieves higher log compression ratios than general-purpose compression alone

- Supports random access through position-based lookups even with compressed data

The two files work together through position-based addressing:

Position-Based Addressing Between Index and Data Files

When executing a query, Logdy Pro:

- Uses the Index file to quickly identify which log entries match the query criteria

- Retrieves only the necessary data from the Data file using the corresponding positions

- Reconstructs the original log entries by combining the retrieved data with dictionary information

This approach provides both space efficiency and query performance by:

- Minimizing I/O operations to only the relevant portions of data

- Leveraging the columnar format for efficient filtering

- Using the index structure to avoid full scans when possible
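The position-based addressing between the two files can be sketched as an in-memory class with two parts:

- The index maps a (field, dictionary ID) pair to the list of row positions that contain it.
- The data side uses those positions to read only the requested columns.

The `Chunk` class, the dictionary contents, and the choice to index every non-timestamp field are all assumptions. The article does not document the real file formats.

```python
from collections import defaultdict

# Hypothetical shared dictionary (ID = list index + 1), as in the dictionary-encoding step.
DICTIONARY = ["payment-gateway", "INFO", "Merchant payout initiated", "ERROR", "Payout failed"]


class Chunk:
    """In-memory stand-in for one Index file + Data file pair (layout is an assumption)."""

    def __init__(self, data_columns):
        self.data = data_columns  # "Data file": equal-length columns addressed by position
        self.index = defaultdict(list)  # "Index file": (field, value ID) -> positions
        for field, values in data_columns.items():
            if field == "ts":
                continue  # timestamps are range-scanned rather than looked up by value
            for position, value_id in enumerate(values):
                self.index[(field, value_id)].append(position)

    def query(self, field, value, out_fields):
        """Find matching positions in the index, then read only the requested columns."""
        if value not in DICTIONARY:
            return []
        value_id = DICTIONARY.index(value) + 1
        positions = self.index.get((field, value_id), [])
        return [
            {f: self._decode(f, self.data[f][p]) for f in out_fields} for p in positions
        ]

    @staticmethod
    def _decode(field, raw):
        # Timestamps stay numeric; every other field is a dictionary ID.
        return raw if field == "ts" else DICTIONARY[raw - 1]


chunk = Chunk({
    "ts": [2006, 2016, 2026, 2036],
    "service": [1, 1, 1, 1],
    "lvl": [2, 4, 2, 4],
    "msg": [3, 5, 3, 5],
})
print(chunk.query("lvl", "ERROR", ["ts", "msg"]))
# [{'ts': 2016, 'msg': 'Payout failed'}, {'ts': 2036, 'msg': 'Payout failed'}]
```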

Time-Based Retention and Data Lifecycle Management

An essential feature of any log management system is the ability to efficiently handle data retention. Logdy Pro implements a flexible time-based retention policy that:

- Automatically removes data older than a specified timeframe

- Optionally archives older data to lower-cost storage (like S3 or cold storage)

- Helps manage storage costs and comply with data retention regulations

The retention mechanism works seamlessly with the storage architecture:

- Data is organized into time-based chunks (typically daily or hourly segments)

- Each chunk has its own Index and Data files

- When a chunk exceeds the retention period, its files can be deleted or archived

- This approach avoids storage fragmentation and maintains query performance

- No complex reindexing or compaction is needed when removing old data
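The chunk arithmetic behind retention can be sketched as follows, assuming chunks are aligned to fixed hourly or daily boundaries in UTC. A chunk becomes eligible only once its entire span is older than the cutoff, so deletion never has to touch individual entries. The helper names are hypothetical, and actually deleting or archiving the files is left out.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def chunk_start(ts: datetime, granularity: timedelta) -> datetime:
    """Align a timestamp to the start of the hourly/daily chunk that holds it."""
    return ts - (ts - EPOCH) % granularity


def expired_chunks(chunk_starts, now, retention, granularity):
    """Chunks whose whole time span ends before the retention cutoff can be dropped."""
    cutoff = now - retention
    return [start for start in chunk_starts if start + granularity <= cutoff]


day = timedelta(days=1)
starts = [datetime(2024, 8, d, tzinfo=timezone.utc) for d in (16, 17, 18, 19)]
now = datetime(2024, 8, 20, 12, 0, tzinfo=timezone.utc)
print(expired_chunks(starts, now, retention=timedelta(days=3), granularity=day))
# Only the 2024-08-16 chunk: its Index and Data files could be deleted or archived as a unit.
```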

This time-based chunking also enables tiered storage strategies:

- Keep recent logs (e.g., last 7 days) on fast local storage

- Move older logs (e.g., 8-30 days) to network storage

- Archive historical logs (e.g., 31+ days) to object storage

- All tiers remain queryable through the same interface
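The tiering policy above reduces to picking a tier from a chunk's age. This sketch hard-codes the example thresholds from the list. The tier labels and the function are hypothetical, and moving files between tiers is not shown.

```python
from datetime import timedelta

# Example thresholds from the list above; real values would come from configuration.
TIER_LIMITS = [
    (timedelta(days=7), "local"),     # recent chunks on fast local storage
    (timedelta(days=30), "network"),  # roughly 8-30 days on network storage
]


def storage_tier(chunk_age: timedelta) -> str:
    """Pick a tier from chunk age; anything older than every limit goes to object storage."""
    for max_age, tier in TIER_LIMITS:
        if chunk_age <= max_age:
            return tier
    return "object"


for days in (2, 15, 45):
    print(days, storage_tier(timedelta(days=days)))
```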

Performance Implications of Advanced Log Compression

The specialized log compression and storage architecture of Logdy Pro has significant performance implications:

Query Performance

Different query types benefit from different aspects of the architecture:

Time-range queries (e.g., "logs from the last hour")

- Extremely fast due to time-based chunking and indexing

- Typically complete in milliseconds regardless of total data volume

Field-specific queries (e.g., "status_code=500")

- Benefit from columnar storage and dictionary encoding

- Performance scales with the selectivity of the filter

- Typically complete in hundreds of milliseconds to seconds

Full-text searches (e.g., "connection timeout")

- Require scanning message fields

- Still benefit from compression and columnar storage

- Performance depends on the data volume being searched

- Typically complete in seconds to tens of seconds for large datasets
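The following sketch shows why time-range queries stay cheap, in two stages:

- Whole chunks outside the window are skipped by comparing their first and last timestamps.
- Within a chunk, a binary search (`bisect`) finds the matching positions.

The sketch assumes each chunk's timestamp column is non-empty and sorted. Entries that arrive out of order would need to be sorted at ingest for this to hold. The data layout and names are hypothetical.

```python
from bisect import bisect_left, bisect_right


def overlapping_chunks(chunks, start, end):
    """Skip whole chunks whose [first_ts, last_ts] span cannot intersect the query window."""
    return [c for c in chunks if c["ts"][0] <= end and c["ts"][-1] >= start]


def time_range(ts_column, start, end):
    """Positions with start <= ts <= end, assuming the column is sorted by time."""
    return range(bisect_left(ts_column, start), bisect_right(ts_column, end))


def query(chunks, start, end, lvl_id=None):
    """Time-range query with an optional field filter that reads only the lvl column."""
    hits = []
    for chunk in overlapping_chunks(chunks, start, end):
        for p in time_range(chunk["ts"], start, end):
            if lvl_id is None or chunk["lvl"][p] == lvl_id:
                hits.append((chunk["ts"][p], chunk["lvl"][p]))
    return hits


# Two hypothetical chunks; the second lies far outside the query window and is skipped.
chunks = [
    {"ts": [2006, 2016, 2026], "lvl": [2, 4, 2]},
    {"ts": [3600006, 3600016], "lvl": [4, 2]},
]
print(query(chunks, 2010, 2030))            # [(2016, 4), (2026, 2)]
print(query(chunks, 2010, 2030, lvl_id=4))  # [(2016, 4)]
```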

Resource Efficiency Through Optimized Log Compression

The log compression storage architecture is designed for minimal resource consumption:

- Memory efficiency — Queries don't require loading entire datasets into memory

- CPU efficiency — Simple query execution model without complex optimization

- I/O efficiency — Reads only the necessary columns and chunks

- Storage efficiency — 15-20x reduction in storage requirements through specialized log compression techniques

This efficiency allows Logdy Pro to run effectively on modest hardware (2-4GB RAM, single CPU) even when managing terabytes of log data.

Conclusion: Specialized Log Compression for Specialized Needs

By focusing specifically on the unique characteristics of log data and its access patterns, Logdy Pro achieves remarkable efficiency in both log compression and query performance. The 15-20x log compression ratio doesn't come at the expense of speed; in fact, the columnar storage approach and specialized indexing enhance query performance for the most common log analysis operations, even when working with highly compressed log data.

This specialized log compression approach demonstrates that when you deeply understand your data and its usage patterns, you can design solutions that break out of the traditional database trade-off triangle. Logdy Pro doesn't try to be everything for everyone—instead, it excels at doing one thing exceptionally well: compressing, storing, and querying log data efficiently.

In the next article, we'll examine real-world log compression benchmark results that demonstrate Logdy Pro's performance characteristics across various datasets and query patterns, showing how effective log compression can dramatically improve both storage efficiency and query performance.

Interested in using Logdy Pro? Get in touch!
