Watch out! You're reading a series of articles

- (currently reading) Logdy Pro Part I: The Problem

The article explains the problems Logdy Pro solves and how it solves them

- Logdy Pro Part II: Storage

The article uncovers how Logdy Pro is able to achieve a 15-20x compression ratio while still maintaining fast search and query capabilities

- Logdy Pro Part III: Benchmark

The article presents comprehensive benchmark results demonstrating Logdy Pro's performance metrics and storage efficiency compared to industry alternatives.

Logs Compression: Solving the Log Management Challenge with Logdy Pro

The Log Management and Compression Challenge

Imagine a mid-size e-commerce company that manages its own infrastructure:

- The company operates its own servers in a data center to maintain control and reduce cloud service costs

- They generate massive amounts of log data (often 10-50GB per day, and more than 1000GB per day is not uncommon) from their web servers, application servers, and databases, creating an urgent need for effective logs compression

- The engineering team needs to quickly diagnose performance issues, track user activity, and ensure security compliance

- Existing log management solutions are proving expensive (often $5,000-$10,000/month for their data volume)

- They're concerned about sending sensitive customer data to third-party cloud services due to compliance requirements

- They need a system that can handle large amounts of data, allow fast searching, and enable data to remain on their own hardware

Attempted Log Compression Solutions and Their Limitations

So far, they've tried several approaches, each with significant drawbacks for log management and compression:

1. ELK Stack (Elasticsearch, Logstash, Kibana)

- The open source version satisfies many of their needs for search and visualization

- However, it proved to be costly to run a cluster of Elasticsearch machines (requiring 3+ nodes for reliability)

- Resource requirements are substantial: 16-32GB RAM per node for decent performance

- Maintenance is time-consuming, requiring dedicated DevOps resources

- Schema management becomes complex as log formats evolve

- Limited native compression capabilities — storage efficiency remains a challenge

2. Log Files on Disk

- Using basic command-line tools (grep, awk) or scripting languages (Python) to analyze log files

- Simple to implement and very low cost

- Extremely cumbersome for complex searches without any indexing

- Finding specific information requires scanning entire files, often taking minutes or hours

- No structured querying capabilities for JSON fields

- Limited retention due to disk space constraints

- Standard compression tools (gzip, bzip2) provide only modest compression ratios for log data
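How "modest" standard compressors are on log data is easy to measure. The sketch below compresses synthetic JSON log lines with Python's built-in `gzip` and `bz2` modules and reports the size ratio. The log format, field names, and value distributions are hypothetical and chosen only for illustration; real ratios depend heavily on how repetitive your logs are, so run it against a sample of your own files before drawing conclusions.

```python
import bz2
import gzip
import json
import random


def make_sample_logs(n: int, seed: int = 42) -> bytes:
    """Generate n synthetic JSON log lines (hypothetical format, illustration only)."""
    rng = random.Random(seed)  # fixed seed so runs are reproducible
    paths = ["/api/cart", "/api/checkout", "/api/products", "/health"]
    levels = ["INFO", "INFO", "INFO", "WARN", "ERROR"]
    lines = []
    for i in range(n):
        entry = {
            "ts": 1_700_000_000 + i,
            "level": rng.choice(levels),
            "path": rng.choice(paths),
            "status": rng.choice([200, 200, 200, 404, 500]),
            "latency_ms": rng.randint(1, 900),
            "user_id": rng.randint(1, 50_000),
        }
        lines.append(json.dumps(entry))
    return ("\n".join(lines) + "\n").encode("utf-8")


def compression_ratio(raw: bytes, compressed: bytes) -> float:
    """Original size divided by compressed size (higher is better)."""
    return len(raw) / len(compressed)


if __name__ == "__main__":
    raw = make_sample_logs(50_000)
    for name, compress in (("gzip", gzip.compress), ("bz2", bz2.compress)):
        packed = compress(raw)
        print(f"{name}: {len(raw):,} -> {len(packed):,} bytes, "
              f"ratio {compression_ratio(raw, packed):.1f}x")
```

Both codecs are lossless, so `gzip.decompress(gzip.compress(raw)) == raw` always holds; the only question is how much space they save on your particular log shape.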

3. Storing Logs in Existing Databases

- The company already operates databases for orders, inventory, etc.

- These databases could theoretically store application logs as well

- This approach was rejected due to:

  - Isolation concerns: the engineering team didn't want logs potentially impacting production data

  - Performance impact on critical business systems

  - Schema rigidity making it difficult to accommodate varying log formats

  - High storage costs for the volume of log data

  - Inefficient compression: general-purpose databases aren't optimized for log data compression

The Fundamental Trade-off in Log Compression

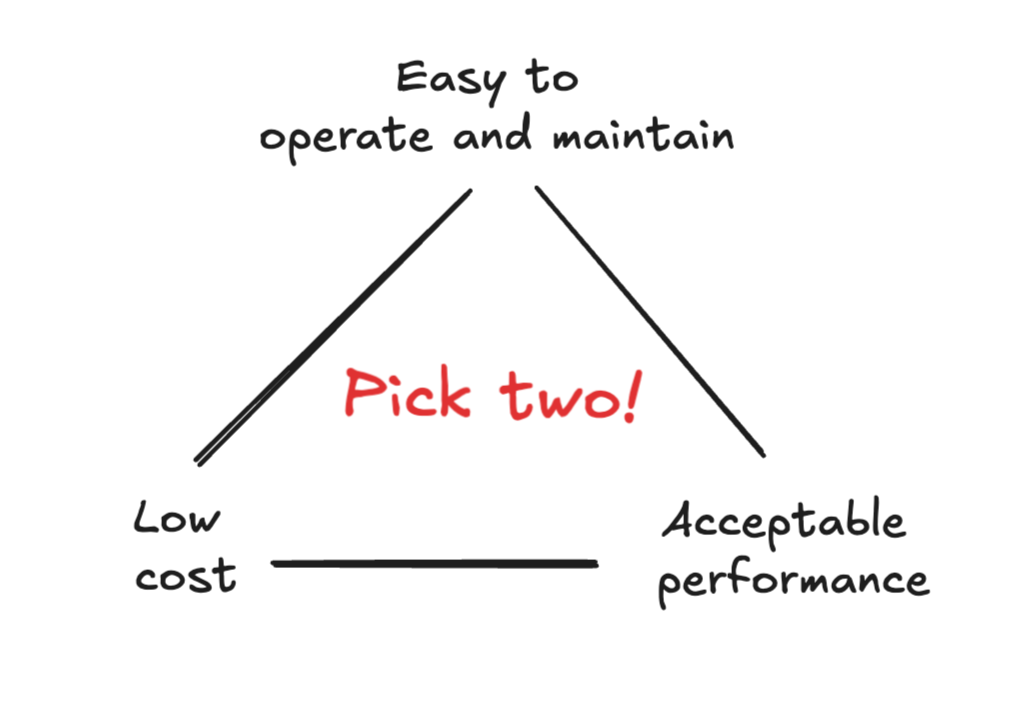

In the end, the company found itself facing a classic "pick two" scenario between three critical characteristics:

The Log Compression and Management Trade-off Triangle

Each available solution forced them to sacrifice at least one of these crucial aspects:

- Performance: How quickly can you search and analyze compressed logs?

- Cost-efficiency: How affordable is the solution in terms of infrastructure, storage, and maintenance?

- Operational simplicity: How easy is it to set up, maintain, and use day-to-day?

The team concluded that no existing logs compression solution adequately covered all three characteristics. Each option on the market excelled in some areas but fell short in others, forcing uncomfortable compromises.

Introducing Logdy Pro: Advanced Logs Compression Technology

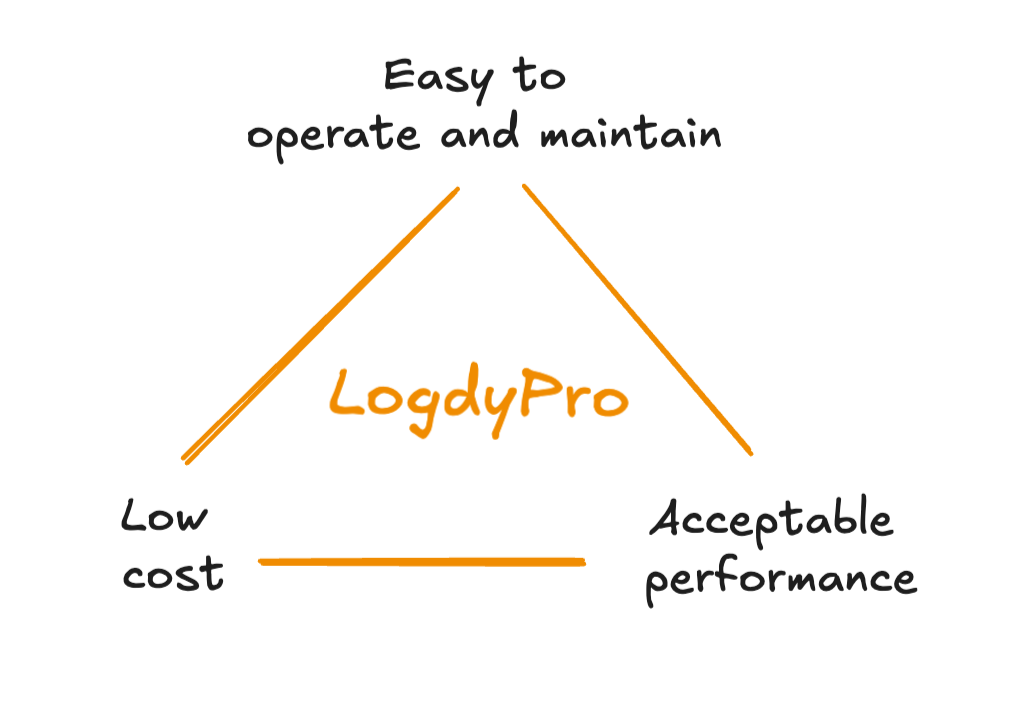

This is where Logdy Pro changes the narrative by offering a balanced approach that addresses all three corners of the trade-off triangle with its innovative logs compression technology:

Logdy Pro's Balanced Logs Compression Approach

Operational Simplicity

- No complicated cluster setup — Run as a single binary with minimal configuration

- Flexible storage options — Keep compressed log files on local drives or in object storage like S3

- Schema-free operation — No need to define indexes or learn a specialized query language

- Minimal resource requirements — Runs efficiently on modest hardware (2-4GB RAM)

- Zero maintenance — No index optimization, shard management, or cluster coordination

- Seamless compression — Log compression happens automatically without manual intervention

Cost Efficiency Through Advanced Logs Compression

- Exceptional logs compression — Up to 20x reduction in storage size compared to raw logs

- Reduced infrastructure costs — No need for expensive, high-memory instances

- Longer retention periods — Keep more historical data within the same storage budget

- Minimal memory footprint — Doesn't require loading entire datasets into memory

- Self-hosted control — No recurring SaaS fees or data transfer costs

- Optimized storage format — Purpose-built for log data compression

Acceptable Performance

- Optimized for common log queries — Fast time-based filtering and field-specific searches

- Columnar storage design — Efficient for analytical queries across large datasets

- Smart indexing strategy — Balances index size with query performance

- Sufficient for real-world needs — While not designed for sub-second queries on terabytes of data, it provides the performance engineering teams need for log analysis, monitoring, and auditing

- Direct query on compressed data — No need to decompress entire files to search
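One common way to query compressed data without decompressing whole files is to split the log stream into independently compressed blocks and keep a small index of each block's minimum and maximum timestamp. A time-range query then decompresses only the blocks whose range overlaps the query window. The sketch below illustrates that generic technique with Python's `zlib`; it is not Logdy Pro's actual storage format (Part II covers that), and `Block`, `BlockStore`, and `block_size` are hypothetical names.

```python
import json
import zlib
from dataclasses import dataclass, field


@dataclass
class Block:
    min_ts: int      # smallest timestamp in the block
    max_ts: int      # largest timestamp in the block
    payload: bytes   # zlib-compressed, newline-separated JSON entries


@dataclass
class BlockStore:
    """Hypothetical block store: compressed blocks plus a tiny time index."""
    block_size: int = 1000
    blocks: list = field(default_factory=list)
    decompressed_blocks: int = 0  # counts how many blocks queries had to open

    def write(self, entries):
        """Split entries (dicts with a 'ts' key) into compressed blocks."""
        for start in range(0, len(entries), self.block_size):
            chunk = entries[start:start + self.block_size]
            raw = "\n".join(json.dumps(e) for e in chunk).encode("utf-8")
            timestamps = [e["ts"] for e in chunk]
            self.blocks.append(Block(min(timestamps), max(timestamps), zlib.compress(raw)))

    def query(self, start_ts, end_ts, predicate=lambda e: True):
        """Return entries with start_ts <= ts <= end_ts that satisfy predicate."""
        results = []
        for block in self.blocks:
            # Skip blocks whose time range cannot overlap the query window.
            if block.max_ts < start_ts or block.min_ts > end_ts:
                continue
            self.decompressed_blocks += 1
            for line in zlib.decompress(block.payload).decode("utf-8").split("\n"):
                entry = json.loads(line)
                if start_ts <= entry["ts"] <= end_ts and predicate(entry):
                    results.append(entry)
        return results


if __name__ == "__main__":
    store = BlockStore(block_size=1000)
    store.write([{"ts": t, "level": "ERROR" if t % 97 == 0 else "INFO"} for t in range(10_000)])
    errors = store.query(2_500, 3_499, lambda e: e["level"] == "ERROR")
    print(len(errors), "errors;", store.decompressed_blocks, "of", len(store.blocks), "blocks opened")
```

Pruning works best when entries arrive roughly in time order, which is usually the case for logs: each block then covers a narrow time range, and most blocks can be skipped for a typical "last hour" query.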

Logdy Pro isn't trying to be the fastest solution on the market. Instead, it delivers a carefully balanced approach that provides sufficient performance while excelling at operational simplicity and logs compression efficiency — the areas where other solutions often fall short.

The additional benefit is that you retain complete control over your data since Logdy Pro is a self-hosted solution, addressing data sovereignty and compliance requirements.

Real-World Logs Compression Implementation

Let's see how our e-commerce company implemented Logdy Pro's logs compression technology in their environment. They operate a fleet of VPS and dedicated servers that handle a relatively static load. They don't use Kubernetes, preferring to isolate customer workloads by maintaining excess capacity.

After evaluating their needs, they implemented Logdy Pro using two different deployment patterns for different use cases:

1. Scheduled Nightly Logs Compression Jobs

For their application servers that generate standard log files, they implemented a scheduled archiving approach:

`0 2 * * * /bin/logdypro archive --config=/home/user/.logdy/config-app.yml --input=/var/log/app.log --output-dir=/var/logdy`

How logs compression works:

- A cron job runs nightly at 2 AM

- The job compresses the previous day's log files using Logdy Pro's highly efficient logs compression algorithm

- The configuration file (`config-app.yml`) contains parsing rules and field mappings specific to their application logs

- Compressed logs are stored in the `/var/logdy` directory

- Log rotation is handled automatically, with the original logs being truncated after successful compression
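The ordering "compress, verify, then truncate" is what makes this pattern safe: if compression fails, the original log is left untouched. The sketch below shows that ordering in Python, using `gzip` as a stand-in for Logdy Pro's own archive format. The function name `archive_and_truncate` and the `<name>.<date>.gz` output naming are hypothetical and do not describe what `logdypro archive` does internally.

```python
import gzip
import os
import shutil
from datetime import date


def archive_and_truncate(log_path: str, output_dir: str, day: date) -> str:
    """Compress log_path into output_dir, then truncate the original.

    Hypothetical helper: gzip stands in for Logdy Pro's archive format.
    """
    os.makedirs(output_dir, exist_ok=True)
    target = os.path.join(output_dir, f"{os.path.basename(log_path)}.{day.isoformat()}.gz")
    tmp = target + ".tmp"

    # 1. Write to a temporary file so a crash never leaves a half-written archive.
    with open(log_path, "rb") as src, gzip.open(tmp, "wb") as dst:
        shutil.copyfileobj(src, dst)

    # 2. Verify the archive decompresses cleanly before touching the original.
    with gzip.open(tmp, "rb") as check:
        while check.read(1 << 20):
            pass

    # 3. Atomically publish the archive, then truncate the source log in place.
    os.replace(tmp, target)
    with open(log_path, "r+b") as src:
        src.truncate(0)
    return target


if __name__ == "__main__":
    import tempfile
    from datetime import timedelta

    workdir = tempfile.mkdtemp()
    log = os.path.join(workdir, "app.log")
    with open(log, "w") as f:
        f.write('{"level":"INFO","msg":"order placed"}\n' * 1000)
    yesterday = date.today() - timedelta(days=1)
    print(archive_and_truncate(log, os.path.join(workdir, "archive"), yesterday))
```

Copy-then-truncate has a known gap: lines the application writes between the copy and the truncate are lost, the same caveat logrotate documents for its `copytruncate` option. Whatever tool performs the rotation has to account for that window.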

Querying the compressed logs:

- Engineers can access compressed logs by running `/bin/logdy read /var/logdy`

- This opens either the CLI browser or the Web UI, depending on preference

- No persistent server is required — perfect for ad-hoc investigations

- Queries execute directly against the compressed log files

Benefits of this logs compression approach:

- Minimal resource usage — no persistent processes running

- Simple integration with existing logging pipelines

- Reduced storage requirements — 90% smaller than the original log files

- Familiar command-line interface for the engineering team

- Efficient storage utilization through advanced logs compression

2. Logdy Pro Daemon for Real-Time Access to Compressed Logs

For their critical services where real-time log access is important, they deployed Logdy Pro as a daemon process:

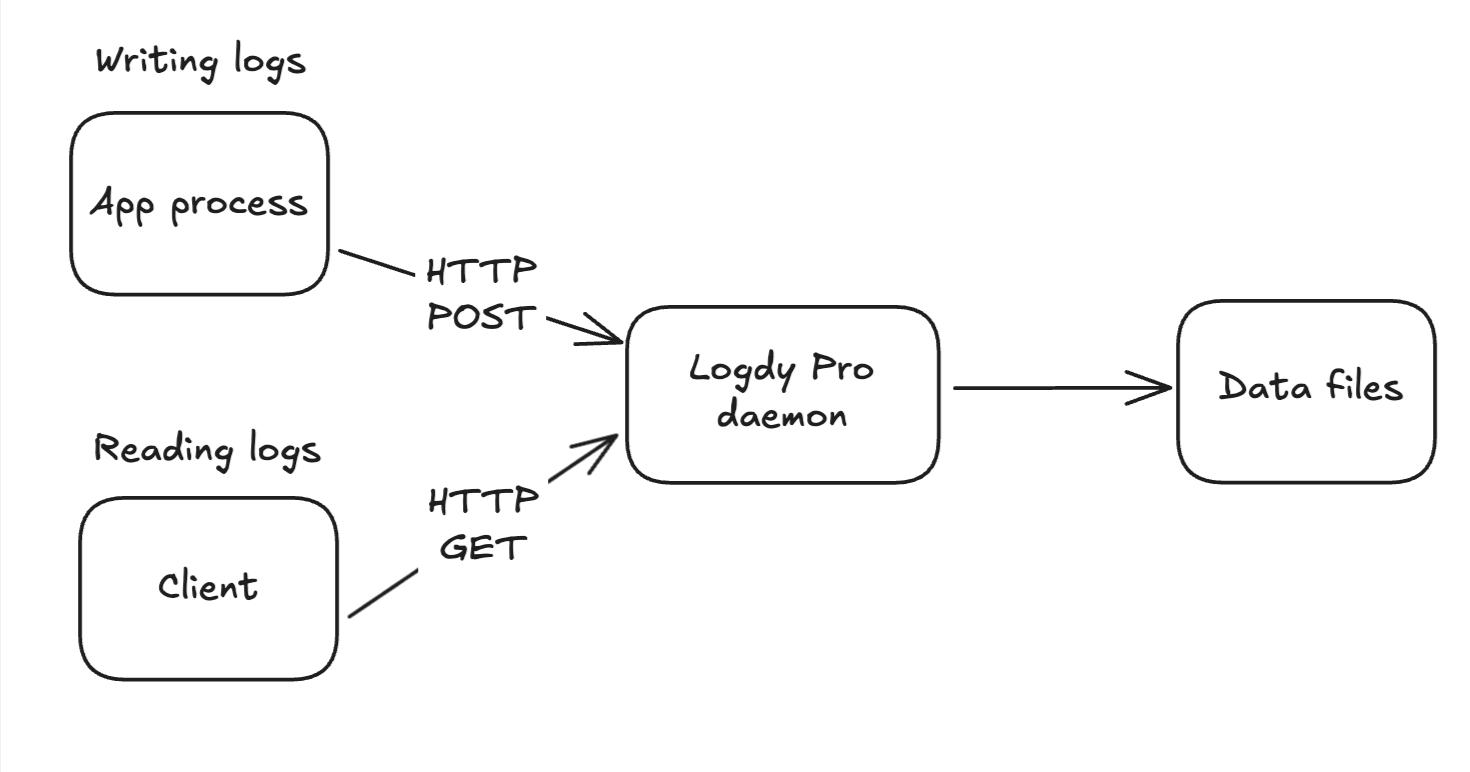

Single-Node Logdy Pro Logs Compression Daemon Setup

How real-time logs compression works:

- A persistent Logdy Pro daemon runs as a service on each application server

- Applications send log entries directly to the daemon via an HTTP API

- Log entries are immediately available for querying

- The daemon accumulates entries up to a configurable threshold (either count or size) before compressing and writing to immutable storage files

- A web interface is available for querying and visualization of compressed logs
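The threshold-based flushing described above can be sketched as a buffer that accepts entries, keeps them searchable while they are still in memory, and writes a compressed, immutable segment as soon as either an entry-count or a byte-size limit is reached. This is a minimal sketch under assumed names: `LogBuffer`, `max_entries`, and `max_bytes` are hypothetical and are not Logdy Pro configuration keys, `zlib` stands in for the real compression, and an in-memory list stands in for storage files.

```python
import json
import zlib


class LogBuffer:
    """Hypothetical ingest buffer: flush on entry count OR byte size."""

    def __init__(self, max_entries: int = 10_000, max_bytes: int = 4 * 1024 * 1024):
        self.max_entries = max_entries
        self.max_bytes = max_bytes
        self.pending = []      # serialized entries not yet flushed
        self.pending_bytes = 0
        self.segments = []     # stand-in for immutable compressed files

    def append(self, entry: dict) -> None:
        line = json.dumps(entry).encode("utf-8")
        self.pending.append(line)
        self.pending_bytes += len(line) + 1  # +1 for the newline separator
        if len(self.pending) >= self.max_entries or self.pending_bytes >= self.max_bytes:
            self.flush()

    def flush(self) -> None:
        """Compress all pending entries into one new segment."""
        if not self.pending:
            return
        self.segments.append(zlib.compress(b"\n".join(self.pending)))  # never modified later
        self.pending = []
        self.pending_bytes = 0

    def query(self, predicate):
        """Search flushed segments first, then the not-yet-flushed buffer."""
        for segment in self.segments:
            for line in zlib.decompress(segment).split(b"\n"):
                entry = json.loads(line)
                if predicate(entry):
                    yield entry
        for line in self.pending:
            entry = json.loads(line)
            if predicate(entry):
                yield entry
```

Searching the in-memory buffer alongside the flushed segments is what makes new entries queryable immediately, while batching turns many small writes into a few larger ones.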

Benefits of this logs compression approach:

- Real-time log visibility — new entries are queryable immediately

- Direct integration with applications — no intermediate files

- Reduced I/O overhead — batched writes to storage

- Always-available query interface for operations teams

- Continuous logs compression for optimal storage efficiency

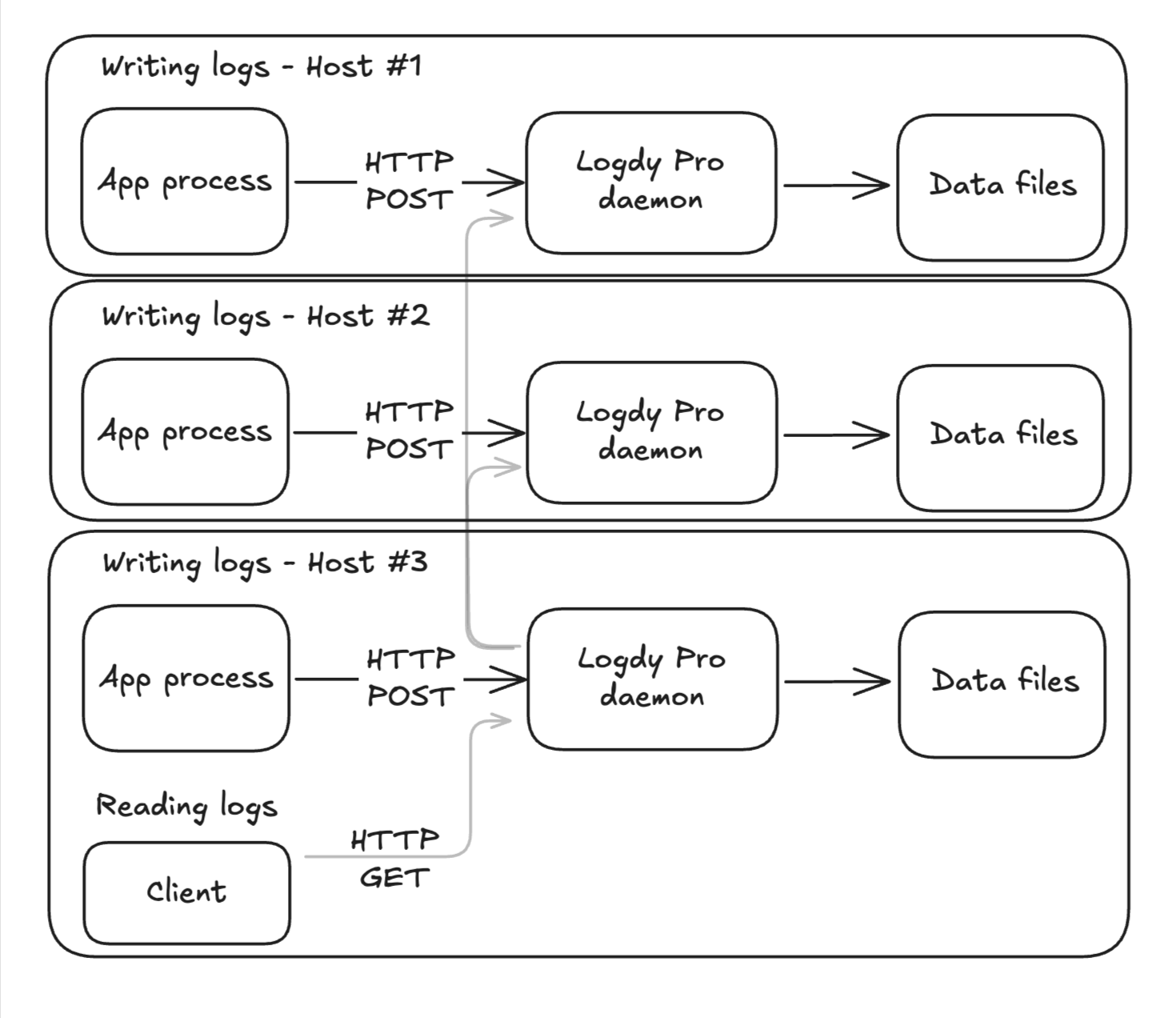

For their larger production environment, they implemented a distributed logs compression setup:

Distributed Logdy Pro Logs Compression Setup

How the distributed logs compression setup works:

- Each host machine runs its own Logdy daemon process

- Each daemon is responsible for collecting, compressing, and storing logs from its local applications

- One designated instance serves as a query coordinator

- The coordinator has knowledge of all other instances and the compressed log data they contain

- When querying through the coordinator, users get a unified view across all machines

- Results are aggregated and presented seamlessly
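The coordinator's role can be sketched as fan-out plus merge: send the same query to every node in parallel, then merge the per-node results, each already sorted by timestamp, into one time-ordered stream. Nodes that fail are reported rather than failing the whole query. In this minimal sketch the nodes are in-process objects, and `Node` and `Coordinator` are hypothetical names; a real deployment would call each daemon over the network and also handle timeouts.

```python
import heapq
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass
class Node:
    """Hypothetical stand-in for a remote Logdy Pro daemon."""
    name: str
    entries: list          # (timestamp, message) tuples stored on this node
    online: bool = True

    def search(self, term: str):
        if not self.online:
            raise ConnectionError(f"{self.name} is unreachable")
        # Each node returns its own matches already sorted by timestamp.
        return sorted((ts, self.name, msg) for ts, msg in self.entries if term in msg)


class Coordinator:
    def __init__(self, nodes):
        self.nodes = nodes

    def search(self, term: str):
        """Query all nodes in parallel; return (merged results, failed node names)."""
        with ThreadPoolExecutor(max_workers=max(1, len(self.nodes))) as pool:
            futures = {node.name: pool.submit(node.search, term) for node in self.nodes}
        partial, failed = [], []
        for name, future in futures.items():
            try:
                partial.append(future.result())
            except ConnectionError:
                failed.append(name)  # other nodes still answer
        # heapq.merge combines already-sorted lists into one sorted sequence.
        return list(heapq.merge(*partial)), failed
```

Because each node sorts its own results, the coordinator only performs a cheap k-way merge instead of re-sorting everything it receives.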

Benefits of the distributed logs compression approach:

- Scalability — each node handles its own logs compression without central bottlenecks

- Fault tolerance — if one node goes down, others continue functioning

- Network efficiency — logs are compressed and stored where they're generated

- Unified querying — single interface to access all compressed logs

- Resource isolation — each node's resource usage is contained

Conclusion: The Power of Efficient Logs Compression

The e-commerce company's experience demonstrates how Logdy Pro's logs compression technology effectively addresses the log management trilemma. By providing a balanced approach to operational simplicity, cost efficiency, and performance, Logdy Pro has enabled them to:

- Reduce their log storage costs by over 90% through advanced logs compression

- Simplify their operational overhead with automated compression workflows

- Maintain sufficient performance for their log analysis needs even with compressed data

- Keep sensitive data on their own infrastructure with self-hosted logs compression

- Scale their log compression and management approach as they grow

In the next article, we'll dive deeper into how Logdy Pro's storage architecture achieves these impressive logs compression ratios while maintaining query performance.

FAQs About Logs Compression

Q: How does Logdy Pro's logs compression compare to standard tools like gzip?

A: Logdy Pro achieves up to 20x compression ratios compared to raw logs, significantly outperforming general-purpose compression tools like gzip (typically 5-10x) by using specialized algorithms designed specifically for log data patterns.

Q: Does logs compression affect query performance?

A: Logdy Pro's unique approach allows querying directly on compressed data without full decompression, maintaining good query performance while drastically reducing storage requirements.

Q: How much historical log data can I retain with Logdy Pro's compression?

A: With 20x compression ratios, you can typically store 20 times more historical log data in the same storage footprint, extending retention periods from weeks to months or even years depending on your volume.
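The retention arithmetic is easy to check with your own numbers. The sketch below computes how many days of logs fit in a storage budget at a given compression ratio, plus the storage reduction that ratio implies (1 - 1/ratio, so 10x means 90% smaller and 20x means 95% smaller). The 50GB/day and 1TB inputs are illustrative assumptions, not measurements.

```python
def retention_days(daily_raw_gb: float, budget_gb: float, ratio: float = 1.0) -> float:
    """Days of logs that fit in budget_gb when stored at the given compression ratio."""
    return budget_gb / (daily_raw_gb / ratio)


def storage_reduction(ratio: float) -> float:
    """Fraction of storage saved at a given compression ratio."""
    return 1 - 1 / ratio


if __name__ == "__main__":
    daily, budget = 50, 1000  # illustrative: 50 GB/day of raw logs, 1 TB of disk
    for ratio in (1, 10, 20):
        print(f"{ratio:>2}x: {retention_days(daily, budget, ratio):4.0f} days, "
              f"{storage_reduction(ratio):.0%} smaller")
```

Retention scales linearly with the ratio: in this example, 20 days of raw logs become 400 days at 20x.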

Q: Is Logdy Pro's logs compression lossless or lossy?

A: Logdy Pro uses lossless compression techniques, ensuring that all original log data is preserved and can be fully reconstructed when needed.

Interested in using Logdy Pro? Let's get in touch!
